Reposting pull request for #22465

> Migration from GitBucket does not work due to a access for "Reviews"

API on GitBucket that makes 404 response. This PR has following changes.

> 1. Made to stop access for Reviews API while migrating from GitBucket.

> 2. Added support for custom URL (e.g.

`http://example.com/gitbucket/owner/repository`)

> 3. Made to accept for git checkout URL

(`http://example.com/git/owner/repository.git`)

Co-authored-by: zeripath <art27@cantab.net>

Addition to #22056

This PR adds a hint to mail text if replies are supported.

I can't tell if the text structure is supported in every language. Maybe

we need to put the whole line in the translation file and use

parameters.

This PR adds a task to the cron service to allow garbage collection of

LFS meta objects. As repositories may have a large number of

LFSMetaObjects, an updated column is added to this table and it is used

to perform a generational GC to attempt to reduce the amount of work.

(There may need to be a bit more work here but this is probably enough

for the moment.)

Fix#7045

Signed-off-by: Andrew Thornton <art27@cantab.net>

As suggest by Go developers, use `filepath.WalkDir` instead of

`filepath.Walk` because [*Walk is less efficient than WalkDir,

introduced in Go 1.16, which avoids calling `os.Lstat` on every file or

directory visited](https://pkg.go.dev/path/filepath#Walk).

This proposition address that, in a similar way as

https://github.com/go-gitea/gitea/pull/22392 did.

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

This PR introduce glob match for protected branch name. The separator is

`/` and you can use `*` matching non-separator chars and use `**` across

separator.

It also supports input an exist or non-exist branch name as matching

condition and branch name condition has high priority than glob rule.

Should fix#2529 and #15705

screenshots

<img width="1160" alt="image"

src="https://user-images.githubusercontent.com/81045/205651179-ebb5492a-4ade-4bb4-a13c-965e8c927063.png">

Co-authored-by: zeripath <art27@cantab.net>

closes#13585fixes#9067fixes#2386

ref #6226

ref #6219fixes#745

This PR adds support to process incoming emails to perform actions.

Currently I added handling of replies and unsubscribing from

issues/pulls. In contrast to #13585 the IMAP IDLE command is used

instead of polling which results (in my opinion 😉) in cleaner code.

Procedure:

- When sending an issue/pull reply email, a token is generated which is

present in the Reply-To and References header.

- IMAP IDLE waits until a new email arrives

- The token tells which action should be performed

A possible signature and/or reply gets stripped from the content.

I added a new service to the drone pipeline to test the receiving of

incoming mails. If we keep this in, we may test our outgoing emails too

in future.

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

The current code propagates all errors up to the iteration step meaning

that a single malformed repo will prevent GC of other repos.

This PR simply stops that propagation.

Fix#21605

Signed-off-by: Andrew Thornton <art27@cantab.net>

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

We can use `:=` to make `err` local to the if-scope instead of

overwriting the `err` in outer scope.

Signed-off-by: jolheiser <john.olheiser@gmail.com>

- Move the file `compare.go` and `slice.go` to `slice.go`.

- Fix `ExistsInSlice`, it's buggy

- It uses `sort.Search`, so it assumes that the input slice is sorted.

- It passes `func(i int) bool { return slice[i] == target })` to

`sort.Search`, that's incorrect, check the doc of `sort.Search`.

- Conbine `IsInt64InSlice(int64, []int64)` and `ExistsInSlice(string,

[]string)` to `SliceContains[T]([]T, T)`.

- Conbine `IsSliceInt64Eq([]int64, []int64)` and `IsEqualSlice([]string,

[]string)` to `SliceSortedEqual[T]([]T, T)`.

- Add `SliceEqual[T]([]T, T)` as a distinction from

`SliceSortedEqual[T]([]T, T)`.

- Redesign `RemoveIDFromList([]int64, int64) ([]int64, bool)` to

`SliceRemoveAll[T]([]T, T) []T`.

- Add `SliceContainsFunc[T]([]T, func(T) bool)` and

`SliceRemoveAllFunc[T]([]T, func(T) bool)` for general use.

- Add comments to explain why not `golang.org/x/exp/slices`.

- Add unit tests.

Fixes#22391

This field is optional for Discord, however when it exists in the

payload it is now validated.

Omitting it entirely just makes Discord use the default for that

webhook, which is set on the Discord side.

Signed-off-by: jolheiser <john.olheiser@gmail.com>

After #22362, we can feel free to use transactions without

`db.DefaultContext`.

And there are still lots of models using `db.DefaultContext`, I think we

should refactor them carefully and one by one.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

- Unify the hashing code for repository and user avatars into a

function.

- Use a sane hash function instead of MD5.

- Only require hashing once instead of twice(w.r.t. hashing for user

avatar).

- Improve the comment for the hashing code of why it works.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: Yarden Shoham <hrsi88@gmail.com>

Previously, there was an `import services/webhooks` inside

`modules/notification/webhook`.

This import was removed (after fighting against many import cycles).

Additionally, `modules/notification/webhook` was moved to

`modules/webhook`,

and a few structs/constants were extracted from `models/webhooks` to

`modules/webhook`.

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

This PR changed the Auth interface signature from

`Verify(http *http.Request, w http.ResponseWriter, store DataStore, sess

SessionStore) *user_model.User`

to

`Verify(http *http.Request, w http.ResponseWriter, store DataStore, sess

SessionStore) (*user_model.User, error)`.

There is a new return argument `error` which means the verification

condition matched but verify process failed, we should stop the auth

process.

Before this PR, when return a `nil` user, we don't know the reason why

it returned `nil`. If the match condition is not satisfied or it

verified failure? For these two different results, we should have

different handler. If the match condition is not satisfied, we should

try next auth method and if there is no more auth method, it's an

anonymous user. If the condition matched but verify failed, the auth

process should be stop and return immediately.

This will fix#20563

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Co-authored-by: Jason Song <i@wolfogre.com>

If user has reached the maximum limit of repositories:

- Before

- disallow create

- allow fork without limit

- This patch:

- disallow create

- disallow fork

- Add option `ALLOW_FORK_WITHOUT_MAXIMUM_LIMIT` (Default **true**) :

enable this allow user fork repositories without maximum number limit

fixed https://github.com/go-gitea/gitea/issues/21847

Signed-off-by: Xinyu Zhou <i@sourcehut.net>

Some dbs require that all tables have primary keys, see

- #16802

- #21086

We can add a test to keep it from being broken again.

Edit:

~Added missing primary key for `ForeignReference`~ Dropped the

`ForeignReference` table to satisfy the check, so it closes#21086.

More context can be found in comments.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: zeripath <art27@cantab.net>

For a long time Gitea has tested PR patches using a git apply --check

method, and in fact prior to the introduction of a read-tree assisted

three-way merge in #18004, this was the only way of checking patches.

Since #18004, the git apply --check method has been a fallback method,

only used when the read-tree three-way merge method has detected a

conflict. The read-tree assisted three-way merge method is much faster

and less resource intensive method of detecting conflicts. #18004 kept

the git apply method around because it was thought possible that this

fallback might be able to rectify conflicts that the read-tree three-way

merge detected. I am not certain if this could ever be the case.

Given the uncertainty here and the now relative stability of the

read-tree method - this PR makes using this fallback optional and

disables it by default. The hope is that users will not notice any

significant difference in conflict detection and we will be able to

remove the git apply fallback in future, and/or improve the read-tree

three-way merge method to catch any conflicts that git apply method

might have been able to fix.

An additional benefit is that patch checking should be significantly

less resource intensive and much quicker.

(See

https://github.com/go-gitea/gitea/issues/22083\#issuecomment-1347961737)

Ref #22083

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

This fixes a bug where, when searching unadopted repositories, active

repositories will be listed as well. This is because the size of the

array of repository names to check is larger by one than the

`IterateBufferSize`.

For an `IterateBufferSize` of 50, the original code will pass 51

repository names but set the query to `LIMIT 50`. If all repositories in

the query are active (i.e. not unadopted) one of them will be omitted

from the result. Due to the `ORDER BY` clause it will be the oldest (or

least recently modified) one.

Bug found in 1.17.3.

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

The recent PR adding orphaned checks to the LFS storage is not

sufficient to completely GC LFS, as it is possible for LFSMetaObjects to

remain associated with repos but still need to be garbage collected.

Imagine a situation where a branch is uploaded containing LFS files but

that branch is later completely deleted. The LFSMetaObjects will remain

associated with the Repository but the Repository will no longer contain

any pointers to the object.

This PR adds a second doctor command to perform a full GC.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Moved files in a patch will result in git apply returning:

```

error: {filename}: No such file or directory

```

This wasn't handled by the git apply patch code. This PR adds handling

for this.

Fix#22083

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Close#14601Fix#3690

Revive of #14601.

Updated to current code, cleanup and added more read/write checks.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Signed-off-by: Andre Bruch <ab@andrebruch.com>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: Norwin <git@nroo.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

When deleting a closed issue, we should update both `NumIssues`and

`NumClosedIssues`, or `NumOpenIssues`(`= NumIssues -NumClosedIssues`)

will be wrong. It's the same for pull requests.

Releated to #21557.

Alse fixed two harmless problems:

- The SQL to check issue/PR total numbers is wrong, that means it will

update the numbers even if they are correct.

- Replace legacy `num_issues = num_issues + 1` operations with

`UpdateRepoIssueNumbers`.

`hex.EncodeToString` has better performance than `fmt.Sprintf("%x",

[]byte)`, we should use it as much as possible.

I'm not an extreme fan of performance, so I think there are some

exceptions:

- `fmt.Sprintf("%x", func(...)[N]byte())`

- We can't slice the function return value directly, and it's not worth

adding lines.

```diff

func A()[20]byte { ... }

- a := fmt.Sprintf("%x", A())

- a := hex.EncodeToString(A()[:]) // invalid

+ tmp := A()

+ a := hex.EncodeToString(tmp[:])

```

- `fmt.Sprintf("%X", []byte)`

- `strings.ToUpper(hex.EncodeToString(bytes))` has even worse

performance.

Change all license headers to comply with REUSE specification.

Fix#16132

Co-authored-by: flynnnnnnnnnn <flynnnnnnnnnn@github>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

Unfortunately the fallback configuration code for [mailer] that were

added in #18982 are incorrect. When you read a value from an ini section

that key is added. This leads to a failure of the fallback mechanism.

Further there is also a spelling mistake in the startTLS configuration.

This PR restructures the mailer code to first map the deprecated

settings on to the new ones - and then use ini.MapTo to map those on to

the struct with additional validation as necessary.

Ref #21744

Signed-off-by: Andrew Thornton <art27@cantab.net>

When re-retrieving hook tasks from the DB double check if they have not

been delivered in the meantime. Further ensure that tasks are marked as

delivered when they are being delivered.

In addition:

* Improve the error reporting and make sure that the webhook task

population script runs in a separate goroutine.

* Only get hook task IDs out of the DB instead of the whole task when

repopulating the queue

* When repopulating the queue make the DB request paged

Ref #17940

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Enable this to require captcha validation for user login. You also must

enable `ENABLE_CAPTCHA`.

Summary:

- Consolidate CAPTCHA template

- add CAPTCHA handle and context

- add `REQUIRE_CAPTCHA_FOR_LOGIN` config and docs

- Consolidate CAPTCHA set-up and verification code

Partially resolved#6049

Signed-off-by: Xinyu Zhou <i@sourcehut.net>

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Andrew Thornton <art27@cantab.net>

Fix#20456

At some point during the 1.17 cycle abbreviated refishs to issue

branches started breaking. This is likely due serious inconsistencies in

our management of refs throughout Gitea - which is a bug needing to be

addressed in a different PR. (Likely more than one)

We should try to use non-abbreviated `fullref`s as much as possible.

That is where a user has inputted a abbreviated `refish` we should add

`refs/heads/` if it is `branch` etc. I know people keep writing and

merging PRs that remove prefixes from stored content but it is just

wrong and it keeps causing problems like this. We should only remove the

prefix at the time of

presentation as the prefix is the only way of knowing umambiguously and

permanently if the `ref` is referring to a `branch`, `tag` or `commit` /

`SHA`. We need to make it so that every ref has the appropriate prefix,

and probably also need to come up with some definitely unambiguous way

of storing `SHA`s if they're used in a `ref` or `refish` field. We must

not store a potentially

ambiguous `refish` as a `ref`. (Especially when referring a `tag` -

there is no reason why users cannot create a `branch` with the same

short name as a `tag` and vice versa and any attempt to prevent this

will fail. You can even create a `branch` and a

`tag` that matches the `SHA` pattern.)

To that end in order to fix this bug, when parsing issue templates check

the provided `Ref` (here a `refish` because almost all users do not know

or understand the subtly), if it does not start with `refs/` add the

`BranchPrefix` to it. This allows people to make their templates refer

to a `tag` but not to a `SHA` directly. (I don't think that is

particularly unreasonable but if people disagree I can make the `refish`

be checked to see if it matches the `SHA` pattern.)

Next we need to handle the issue links that are already written. The

links here are created with `git.RefURL`

Here we see there is a bug introduced in #17551 whereby the provided

`ref` argument can be double-escaped so we remove the incorrect external

escape. (The escape added in #17551 is in the right place -

unfortunately I missed that the calling function was doing the wrong

thing.)

Then within `RefURL()` we check if an unprefixed `ref` (therefore

potentially a `refish`) matches the `SHA` pattern before assuming that

is actually a `commit` - otherwise is assumed to be a `branch`. This

will handle most of the problem cases excepting the very unusual cases

where someone has deliberately written a `branch` to look like a `SHA1`.

But please if something is called a `ref` or interpreted as a `ref` make

it a full-ref before storing or using it. By all means if something is a

`branch` assume the prefix is removed but always add it back in if you

are using it as a `ref`. Stop storing abbreviated `branch` names and

`tag` names - which are `refish` as a `ref`. It will keep on causing

problems like this.

Fix#20456

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lauris BH <lauris@nix.lv>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

This PR adds a context parameter to a bunch of methods. Some helper

`xxxCtx()` methods got replaced with the normal name now.

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

GitHub migration tests will be skipped if the secret for the GitHub API

token hasn't been set.

This change should make all tests pass (or skip in the case of this one)

for anyone running the pipeline on their own infrastructure without

further action on their part.

Resolves https://github.com/go-gitea/gitea/issues/21739

Signed-off-by: Gary Moon <gary@garymoon.net>

The `getPullRequestPayloadInfo` function is widely used in many webhook,

it works well when PR is open or edit. But when we comment in PR review

panel (not PR panel), the comment content is not set as

`attachmentText`.

This commit set comment content as `attachmentText` when PR review, so

webhook could obtain this information via this function.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Fix#19513

This PR introduce a new db method `InTransaction(context.Context)`,

and also builtin check on `db.TxContext` and `db.WithTx`.

There is also a new method `db.AutoTx` has been introduced but could be used by other PRs.

`WithTx` will always open a new transaction, if a transaction exist in context, return an error.

`AutoTx` will try to open a new transaction if no transaction exist in context.

That means it will always enter a transaction if there is no error.

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: 6543 <6543@obermui.de>

The purpose of #18982 is to improve the SMTP mailer, but there were some

unrelated changes made to the SMTP auth in

d60c438694

This PR reverts these unrelated changes, fix#21744

Related #20471

This PR adds global quota limits for the package registry. Settings for

individual users/orgs can be added in a seperate PR using the settings

table.

Co-authored-by: Lauris BH <lauris@nix.lv>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Close https://github.com/go-gitea/gitea/issues/21640

Before: Gitea can create users like ".xxx" or "x..y", which is not

ideal, it's already a consensus that dot filenames have special

meanings, and `a..b` is a confusing name when doing cross repo compare.

After: stricter

Co-authored-by: Jason Song <i@wolfogre.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: delvh <dev.lh@web.de>

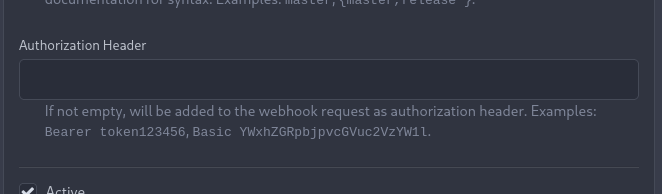

_This is a different approach to #20267, I took the liberty of adapting

some parts, see below_

## Context

In some cases, a weebhook endpoint requires some kind of authentication.

The usual way is by sending a static `Authorization` header, with a

given token. For instance:

- Matrix expects a `Bearer <token>` (already implemented, by storing the

header cleartext in the metadata - which is buggy on retry #19872)

- TeamCity #18667

- Gitea instances #20267

- SourceHut https://man.sr.ht/graphql.md#authentication-strategies (this

is my actual personal need :)

## Proposed solution

Add a dedicated encrypt column to the webhook table (instead of storing

it as meta as proposed in #20267), so that it gets available for all

present and future hook types (especially the custom ones #19307).

This would also solve the buggy matrix retry #19872.

As a first step, I would recommend focusing on the backend logic and

improve the frontend at a later stage. For now the UI is a simple

`Authorization` field (which could be later customized with `Bearer` and

`Basic` switches):

The header name is hard-coded, since I couldn't fine any usecase

justifying otherwise.

## Questions

- What do you think of this approach? @justusbunsi @Gusted @silverwind

- ~~How are the migrations generated? Do I have to manually create a new

file, or is there a command for that?~~

- ~~I started adding it to the API: should I complete it or should I

drop it? (I don't know how much the API is actually used)~~

## Done as well:

- add a migration for the existing matrix webhooks and remove the

`Authorization` logic there

_Closes #19872_

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: Gusted <williamzijl7@hotmail.com>

Co-authored-by: delvh <dev.lh@web.de>

I found myself wondering whether a PR I scheduled for automerge was

actually merged. It was, but I didn't receive a mail notification for it

- that makes sense considering I am the doer and usually don't want to

receive such notifications. But ideally I want to receive a notification

when a PR was merged because I scheduled it for automerge.

This PR implements exactly that.

The implementation works, but I wonder if there's a way to avoid passing

the "This PR was automerged" state down so much. I tried solving this

via the database (checking if there's an automerge scheduled for this PR

when sending the notification) but that did not work reliably, probably

because sending the notification happens async and the entry might have

already been deleted. My implementation might be the most

straightforward but maybe not the most elegant.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lauris BH <lauris@nix.lv>

Co-authored-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

GitHub allows releases with target commitish `refs/heads/BRANCH`, which

then causes issues in Gitea after migration. This fix handles cases that

a branch already has a prefix.

Fixes#20317

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

A bug was introduced in #17865 where filepath.Join is used to join

putative unadopted repository owner and names together. This is

incorrect as these names are then used as repository names - which shoud

have the '/' separator. This means that adoption will not work on

Windows servers.

Fix#21632

Signed-off-by: Andrew Thornton <art27@cantab.net>

The OAuth spec [defines two types of

client](https://datatracker.ietf.org/doc/html/rfc6749#section-2.1),

confidential and public. Previously Gitea assumed all clients to be

confidential.

> OAuth defines two client types, based on their ability to authenticate

securely with the authorization server (i.e., ability to

> maintain the confidentiality of their client credentials):

>

> confidential

> Clients capable of maintaining the confidentiality of their

credentials (e.g., client implemented on a secure server with

> restricted access to the client credentials), or capable of secure

client authentication using other means.

>

> **public

> Clients incapable of maintaining the confidentiality of their

credentials (e.g., clients executing on the device used by the resource

owner, such as an installed native application or a web browser-based

application), and incapable of secure client authentication via any

other means.**

>

> The client type designation is based on the authorization server's

definition of secure authentication and its acceptable exposure levels

of client credentials. The authorization server SHOULD NOT make

assumptions about the client type.

https://datatracker.ietf.org/doc/html/rfc8252#section-8.4

> Authorization servers MUST record the client type in the client

registration details in order to identify and process requests

accordingly.

Require PKCE for public clients:

https://datatracker.ietf.org/doc/html/rfc8252#section-8.1

> Authorization servers SHOULD reject authorization requests from native

apps that don't use PKCE by returning an error message

Fixes#21299

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Previously mentioning a user would link to its profile, regardless of

whether the user existed. This change tests if the user exists and only

if it does - a link to its profile is added.

* Fixes#3444

Signed-off-by: Yarden Shoham <hrsi88@gmail.com>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

When actions besides "delete" are performed on issues, the milestone

counter is updated. However, since deleting issues goes through a

different code path, the associated milestone's count wasn't being

updated, resulting in inaccurate counts until another issue in the same

milestone had a non-delete action performed on it.

I verified this change fixes the inaccurate counts using a local docker

build.

Fixes#21254

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

At the moment a repository reference is needed for webhooks. With the

upcoming package PR we need to send webhooks without a repository

reference. For example a package is uploaded to an organization. In

theory this enables the usage of webhooks for future user actions.

This PR removes the repository id from `HookTask` and changes how the

hooks are processed (see `services/webhook/deliver.go`). In a follow up

PR I want to remove the usage of the `UniqueQueue´ and replace it with a

normal queue because there is no reason to be unique.

Co-authored-by: 6543 <6543@obermui.de>

When a PR reviewer reviewed a file on a commit that was later gc'ed,

they would always get a `500` response from then on when loading the PR.

This PR simply ignores that error and instead marks all files as

unchanged.

This approach was chosen as the only feasible option without diving into

**a lot** of error handling.

Fixes#21392

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

A lot of our code is repeatedly testing if individual errors are

specific types of Not Exist errors. This is repetitative and unnecesary.

`Unwrap() error` provides a common way of labelling an error as a

NotExist error and we can/should use this.

This PR has chosen to use the common `io/fs` errors e.g.

`fs.ErrNotExist` for our errors. This is in some ways not completely

correct as these are not filesystem errors but it seems like a

reasonable thing to do and would allow us to simplify a lot of our code

to `errors.Is(err, fs.ErrNotExist)` instead of

`package.IsErr...NotExist(err)`

I am open to suggestions to use a different base error - perhaps

`models/db.ErrNotExist` if that would be felt to be better.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: delvh <dev.lh@web.de>

For normal commits the notification url was wrong because oldCommitID is received from the shrinked commits list.

This PR moves the commits list shrinking after the oldCommitID assignment.

Fixes#21379

The commits are capped by `setting.UI.FeedMaxCommitNum` so

`len(commits)` is not the correct number. So this PR adds a new

`TotalCommits` field.

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

When merge was changed to run in the background context, the db updates

were still running in request context. This means that the merge could

be successful but the db not be updated.

This PR changes both these to run in the hammer context, this is not

complete rollback protection but it's much better.

Fix#21332

Signed-off-by: Andrew Thornton <art27@cantab.net>

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

We should only log CheckPath errors if they are not simply due to

context cancellation - and we should add a little more context to the

error message.

Fix#20709

Signed-off-by: Andrew Thornton <art27@cantab.net>

Close#20315 (fix the panic when parsing invalid input), Speed up #20231 (use ls-tree without size field)

Introduce ListEntriesRecursiveFast (ls-tree without size) and ListEntriesRecursiveWithSize (ls-tree with size)

Only load SECRET_KEY and INTERNAL_TOKEN if they exist.

Never write the config file if the keys do not exist, which was only a fallback for Gitea upgraded from < 1.5

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

This is useful in scenarios where the reverse proxy may have knowledge

of user emails, but does not know about usernames set on gitea,

as in the feature request in #19948.

I tested this by setting up a fresh gitea install with one user `mhl`

and email `m.hasnain.lakhani@gmail.com`. I then created a private repo,

and configured gitea to allow reverse proxy authentication.

Via curl I confirmed that these two requests now work and return 200s:

curl http://localhost:3000/mhl/private -I --header "X-Webauth-User: mhl"

curl http://localhost:3000/mhl/private -I --header "X-Webauth-Email: m.hasnain.lakhani@gmail.com"

Before this commit, the second request did not work.

I also verified that if I provide an invalid email or user,

a 404 is correctly returned as before

Closes#19948

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: 6543 <6543@obermui.de>

The behaviour of `PreventSurroundingPre` has changed in

https://github.com/alecthomas/chroma/pull/618 so that apparently it now

causes line wrapper tags to be no longer emitted, but we need some form

of indication to split the HTML into lines, so I did what

https://github.com/yuin/goldmark-highlighting/pull/33 did and added the

`nopWrapper`.

Maybe there are more elegant solutions but for some reason, just

splitting the HTML string on `\n` did not work.

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Both allow only limited characters. If you input more, you will get a error

message. So it make sense to limit the characters of the input fields.

Slightly relax the MaxSize of repo's Description and Website

If you are create a new new branch while viewing file or directory, you

get redirected to the root of the repo. With this PR, you keep your

current path instead of getting redirected to the repo root.

The `go-licenses` make task introduced in #21034 is being run on make vendor

and occasionally causes an empty go-licenses file if the vendors need to

change. This should be moved to the generate task as it is a generated file.

Now because of this change we also need to split generation into two separate

steps:

1. `generate-backend`

2. `generate-frontend`

In the future it would probably be useful to make `generate-swagger` part of `generate-frontend` but it's not tolerated with our .drone.yml

Ref #21034

Signed-off-by: Andrew Thornton <art27@cantab.net>

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: delvh <dev.lh@web.de>

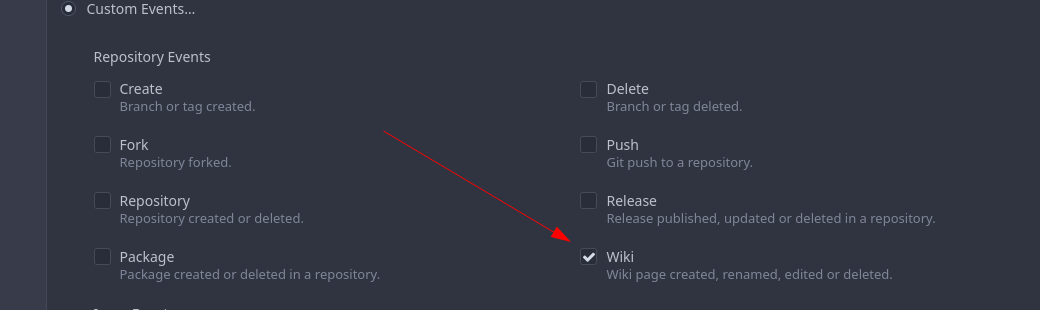

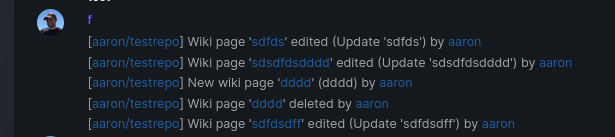

Add support for triggering webhook notifications on wiki changes.

This PR contains frontend and backend for webhook notifications on wiki actions (create a new page, rename a page, edit a page and delete a page). The frontend got a new checkbox under the Custom Event -> Repository Events section. There is only one checkbox for create/edit/rename/delete actions, because it makes no sense to separate it and others like releases or packages follow the same schema.

The actions itself are separated, so that different notifications will be executed (with the "action" field). All the webhook receivers implement the new interface method (Wiki) and the corresponding tests.

When implementing this, I encounter a little bug on editing a wiki page. Creating and editing a wiki page is technically the same action and will be handled by the ```updateWikiPage``` function. But the function need to know if it is a new wiki page or just a change. This distinction is done by the ```action``` parameter, but this will not be sent by the frontend (on form submit). This PR will fix this by adding the ```action``` parameter with the values ```_new``` or ```_edit```, which will be used by the ```updateWikiPage``` function.

I've done integration tests with matrix and gitea (http).

Fix#16457

Signed-off-by: Aaron Fischer <mail@aaron-fischer.net>

A testing cleanup.

This pull request replaces `os.MkdirTemp` with `t.TempDir`. We can use the `T.TempDir` function from the `testing` package to create temporary directory. The directory created by `T.TempDir` is automatically removed when the test and all its subtests complete.

This saves us at least 2 lines (error check, and cleanup) on every instance, or in some cases adds cleanup that we forgot.

Reference: https://pkg.go.dev/testing#T.TempDir

```go

func TestFoo(t *testing.T) {

// before

tmpDir, err := os.MkdirTemp("", "")

require.NoError(t, err)

defer os.RemoveAll(tmpDir)

// now

tmpDir := t.TempDir()

}

```

Signed-off-by: Eng Zer Jun <engzerjun@gmail.com>

When migrating add several more important sanity checks:

* SHAs must be SHAs

* Refs must be valid Refs

* URLs must be reasonable

Signed-off-by: Andrew Thornton <art27@cantab.net>

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: techknowlogick <matti@mdranta.net>

There are a lot of go dependencies that appear old and we should update them.

The following packages have been updated:

* codeberg.org/gusted/mcaptcha

* github.com/markbates/goth

* github.com/buildkite/terminal-to-html

* github.com/caddyserver/certmagic

* github.com/denisenkom/go-mssqldb

* github.com/duo-labs/webauthn

* github.com/editorconfig/editorconfig-core-go/v2

* github.com/felixge/fgprof

* github.com/gliderlabs/ssh

* github.com/go-ap/activitypub

* github.com/go-git/go-git/v5

* github.com/go-ldap/ldap/v3

* github.com/go-swagger/go-swagger

* github.com/go-testfixtures/testfixtures/v3

* github.com/golang-jwt/jwt/v4

* github.com/klauspost/compress

* github.com/lib/pq

* gitea.com/lunny/dingtalk_webhook - instead of github.com

* github.com/mattn/go-sqlite3

* github/matn/go-isatty

* github.com/minio/minio-go/v7

* github.com/niklasfasching/go-org

* github.com/prometheus/client_golang

* github.com/stretchr/testify

* github.com/unrolled/render

* github.com/xanzy/go-gitlab

* gopkg.in/ini.v1

Signed-off-by: Andrew Thornton <art27@cantab.net>

* fix hard-coded timeout and error panic in API archive download endpoint

This commit updates the `GET /api/v1/repos/{owner}/{repo}/archive/{archive}`

endpoint which prior to this PR had a couple of issues.

1. The endpoint had a hard-coded 20s timeout for the archiver to complete after

which a 500 (Internal Server Error) was returned to client. For a scripted

API client there was no clear way of telling that the operation timed out and

that it should retry.

2. Whenever the timeout _did occur_, the code used to panic. This was caused by

the API endpoint "delegating" to the same call path as the web, which uses a

slightly different way of reporting errors (HTML rather than JSON for

example).

More specifically, `api/v1/repo/file.go#GetArchive` just called through to

`web/repo/repo.go#Download`, which expects the `Context` to have a `Render`

field set, but which is `nil` for API calls. Hence, a `nil` pointer error.

The code addresses (1) by dropping the hard-coded timeout. Instead, any

timeout/cancelation on the incoming `Context` is used.

The code addresses (2) by updating the API endpoint to use a separate call path

for the API-triggered archive download. This avoids producing HTML-errors on

errors (it now produces JSON errors).

Signed-off-by: Peter Gardfjäll <peter.gardfjall.work@gmail.com>

The recovery, API, Web and package frameworks all create their own HTML

Renderers. This increases the memory requirements of Gitea

unnecessarily with duplicate templates being kept in memory.

Further the reloading framework in dev mode for these involves locking

and recompiling all of the templates on each load. This will potentially

hide concurrency issues and it is inefficient.

This PR stores the templates renderer in the context and stores this

context in the NormalRoutes, it then creates a fsnotify.Watcher

framework to watch files.

The watching framework is then extended to the mailer templates which

were previously not being reloaded in dev.

Then the locales are simplified to a similar structure.

Fix#20210Fix#20211Fix#20217

Signed-off-by: Andrew Thornton <art27@cantab.net>

Some Migration Downloaders provide re-writing of CloneURLs that may point to

unallowed urls. Recheck after the CloneURL is rewritten.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Some repositories do not have the PullRequest unit present in their configuration

and unfortunately the way that IsUserAllowedToUpdate currently works assumes

that this is an error instead of just returning false.

This PR simply swallows this error allowing the function to return false.

Fix#20621

Signed-off-by: Andrew Thornton <art27@cantab.net>

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: Lauris BH <lauris@nix.lv>